Beyond Being Shadowbanned: Instagram's Process of Demoting and Deleting Posts

The Markup, now a part of CalMatters, uses investigative reporting, data analysis, and software engineering to challenge technology to serve the public good.


For the last seven years, Nabeel Shah has used Instagram as a place to share photos and videos about his life (Valentine’s Day gift ideas, images of his family cooking) and to support his wife, Maryam Ishtiaq, and the small business they co-founded selling halal bone broth. Shah has more than 35,000 followers, but that pales in comparison with Ishtiaq, who has over 300,000.

Automated Censorship

Read the entire series:

- Demoted, Deleted, and Denied: There’s More Than Just Shadowbanning on Instagram

- How We Investigated Shadowbanning on Instagram

- What To Do If You Think You’ve Been Shadowbanned on Instagram

Like many Instagram users, Shah also speaks out about issues he cares about, even if doing so breaks from his normal lifestyle posts, and even if there’s a cost.

“Initially, we did not post very heavily about conflicts, and if we did, we did in our Stories, [videos that disappear after a day by default], intermixed with other content,” Shah said. But after the Israel–Hamas war began in October 2023, like many Instagram users and prominent journalists, Shah reported seeing a drop in how many people viewed his Stories any time he posted about Palestine.

On Oct. 23, 2023, Shah amplified a post with casualty statistics on the Israel–Hamas war, including Israel’s incarceration of Palestinians in the West Bank. He shared this post in his Stories and added his own message underneath: “There is no Hamas in the West Bank. … Hamas is the excuse, not the reason.” Hamas, designated a terrorist organization by the U.S. in 1997, is based primarily in Gaza, where it exercises limited governance, but it has operatives elsewhere, including the West Bank and Lebanon.

Instagram deleted Shah’s Story, saying that what he posted supported dangerous organizations and individuals (DOI), and that a one-year strike had been placed on his account. Accumulating strikes results in increasing penalties on the platform.

To Shah, the deletion didn’t make sense, so he requested that Instagram review its decision.

“The post in no way was supporting any organization,” he said. “That post was actually just critical of a narrative that’s being propagated, which was the narrative that the Israeli government is only attacking Hamas.

“It messes with your head a little bit. … If I want to make something out of [my Instagram account], if I want to create content that reaches people, I can’t speak about this. … That gives a sense of almost hopelessness.”

In February, The Markup asked Meta, Instagram’s parent company, why his Story had been deleted. Meta didn’t offer a specific reason, but a December 2023 Human Rights Watch report found “hundreds of cases where the mere neutral mention of Hamas on Instagram and Facebook triggered the DOI policy, prompting the platforms to immediately remove posts, stories, comments, and videos, and restrict accounts that posted them.”

Meta spokesperson Dani Lever told The Markup that “[Shah’s] content was mistakenly removed and has since been restored.” A few days later, Shah told The Markup that he no longer had a strike on his account, but he could not find his Story in his Instagram archives. Shah also said Instagram had not notified him of any changes to his account or Stories.

“Instagram is not intentionally limiting people’s Stories reach,” Lever said. Referencing a widely reported issue with Stories, she added, “As we previously confirmed, there was a bug that affected people globally, not only those in Israel and Gaza, and that had nothing to [do] with the subject matter of the content, which was fixed in October.”

The Markup reviewed hundreds of screenshots and videos from users like Shah who documented behavior that looked like censorship, analyzed metadata from thousands of accounts and posts, spoke with experts, and interviewed 20 users who believed their content had been blocked or demoted. Using the information we gathered, The Markup then manually tested Instagram’s automated moderation responses on 82 new accounts and 14 existing accounts.

Our investigation found that Instagram heavily demoted nongraphic images of war, deleted captions and hid comments without notification, erratically suppressed hashtags, and denied users the option to appeal when the company removed their comments, including ones about Israel and Palestine, as “spam.”

The Markup also repeatedly saw “buggy” behavior, no matter whether we were reviewing examples from users or conducting tests on Instagram ourselves: Buttons to appeal deletions didn’t always work, a hashtag sometimes showed more than a hundred posts and other times didn’t show any results, and alerts indicated that the user had a bad internet connection even though there were no other connectivity issues.

Instagram has a history of claiming that “bugs” and “glitches” have made people believe that its content moderation is biased, such as when search results favored Donald Trump over Joe Biden during the 2020 election, when posts mentioning abortion were hidden after the Supreme Court overturned Roe v. Wade, and in 2021, when Palestinians had their accounts and posts blocked after posting about evictions from the Sheikh Jarrah neighborhood and the storming of the Al-Aqsa Mosque by Israeli police. (Instagram blamed a global Stories bug then, too.)

Since the Israel–Hamas war began, Instagram has been accused of hiding comments of just Palestinian flag emojis, inserting the word “terrorist” into translations of some Palestinian users’ profiles, and censoring posts supporting Palestine. Meta has yet to explain the exact mechanisms responsible for any particular mistake, but it is known that the platform makes conscious, deliberate decisions about how to amplify or suppress content related to the war.

Meta normally hides a user’s posts if its systems are 80 percent certain that something counts as “hostile speech” (which includes things such as “harassment and incitement to violence”); in October, The Wall Street Journal reported that the company has since created “temporary risk response measures” that dropped the threshold to 40 percent for certain parts of the Middle East.
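To make the effect of that threshold change concrete, here is a minimal Python sketch. It assumes a hypothetical classifier that returns a confidence score between 0 and 1; the function name `should_hide`, the "meets or exceeds" comparison, and the sample scores are all illustrative assumptions and do not describe Meta's actual systems. Only the 80 percent and 40 percent figures come from the reporting above.

```python
# Hypothetical sketch: how lowering a confidence threshold widens what gets hidden.
# Only the 0.80 and 0.40 thresholds come from the reporting; everything else is assumed.

DEFAULT_THRESHOLD = 0.80    # normal threshold reported for "hostile speech"
TEMPORARY_THRESHOLD = 0.40  # reported temporary threshold for parts of the Middle East


def should_hide(score: float, threshold: float) -> bool:
    """Return True when the (assumed) classifier score meets or exceeds the threshold."""
    return score >= threshold


# Made-up confidence scores for five imaginary posts.
sample_scores = [0.15, 0.35, 0.45, 0.62, 0.85]

hidden_default = [s for s in sample_scores if should_hide(s, DEFAULT_THRESHOLD)]
hidden_temporary = [s for s in sample_scores if should_hide(s, TEMPORARY_THRESHOLD)]

print(f"Hidden at 80 percent: {len(hidden_default)} of {len(sample_scores)}")
print(f"Hidden at 40 percent: {len(hidden_temporary)} of {len(sample_scores)}")
```

With these invented scores, the lower threshold hides posts that the classifier is far less sure about, which is why a change like this can sweep in more content that does not actually violate policy.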

As TechCrunch has detailed, the platform’s moderation system seems to disproportionately suppress Palestinian users. The Markup found a few accusations of supporters of Israel feeling suppressed, but we did not identify more sweeping evidence through our reporting or testing.

“Our policies are designed to give everyone a voice while at the same time keeping our platforms safe,” Lever said. “We’re currently enforcing these policies during a fast-moving, highly polarized and intense conflict, which has led to an increase in content being reported to us. We readily acknowledge errors can be made but any implication that we deliberately and systemically suppress a particular voice is false.”

Demoting Images of War

The Markup attempted to replicate 10 types of censorship reports by Instagram users with more than 70 of our newly created Instagram accounts, curating whom the accounts followed and what they posted. To test what types of accounts or posts Instagram surfaces or demotes, we also created two brand-new hashtags, free of posts from non-Markup Instagram users, and creatively named them #markuptest and #safemarkup. Instagram says that a post’s visibility on hashtag pages may be affected by its content, even if it doesn’t explicitly go against the “Community Guidelines.”

After multiple rounds of tests, spaced out over weeks, The Markup found that nongraphic photos depicting the war were 8.5 times more likely than other posts to be hidden from #markuptest.

We designated 20 accounts to post different photos of destroyed buildings, soldiers, and military tanks, all imagery from the Israel–Hamas war, with the #markuptest hashtag. In its community guidelines, Instagram says that it “may remove videos of intense, graphic violence to make sure Instagram stays appropriate for everyone.” None of the images we posted showed people in distress or casualties.

Within the first three days of posting, we saw five out of 19 photos show up under the hashtag’s search results. After one week, only six of the 19 war posts had ever appeared. In contrast, across all test accounts, over 95 percent of all other posts showed up on #markuptest at least once.
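The first-round figures above can be turned into rates with a few lines of Python. This is illustrative arithmetic only: it treats "over 95 percent" as exactly 95 percent, which gives an upper bound on the hidden share of other posts and therefore a lower bound on the ratio. It does not reproduce The Markup's 8.5 figure, which covers multiple rounds of testing and whose exact calculation is not given here. The variable names are our own.

```python
# Illustrative arithmetic using only the first-round figures quoted above.
war_posts_total = 19
war_posts_seen_after_three_days = 5
war_posts_ever_seen = 6

# "Over 95 percent" of other posts appeared, so at most 5 percent never did.
# Treating it as exactly 95 percent is an assumption that yields an upper bound.
other_posts_seen_share = 0.95

three_day_share = war_posts_seen_after_three_days / war_posts_total
war_seen_share = war_posts_ever_seen / war_posts_total
war_hidden_share = 1 - war_seen_share
other_hidden_share_max = 1 - other_posts_seen_share

# Ratio of hidden rates; a lower bound because the denominator is an upper bound.
hidden_ratio_lower_bound = war_hidden_share / other_hidden_share_max

print(f"War posts visible within three days: {three_day_share:.1%}")
print(f"War posts ever visible after one week: {war_seen_share:.1%}")
print(f"War posts never visible after one week: {war_hidden_share:.1%}")
print(f"Hidden-rate ratio, first round only: at least {hidden_ratio_lower_bound:.1f}x")
```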

In our second round of testing, we posted the same war photos, along with one additional photo, taken in 2020, of an Israeli tank. Less than a third of the war photos, including the ones from our first round, were ever visible on #markuptest, while 92 percent of other kinds of posts were visible at least once. Instagram did not notify any of our accounts that it was hiding or demoting our posts on hashtag pages.

Meta declined to comment on why the war photos seemed less likely to appear than other kinds of photos on a hashtag page, despite the fact that they did not violate Instagram’s guidelines on intense, graphic imagery.

Because users are not notified about images that could be demoted but don’t violate guidelines, Jalaa Abuarab tries to make sure her organization doesn’t publish anything on Instagram that might get it banned or shadowbanned. Abuarab is the editor-in-chief at Dooz, a Palestinian local news outlet based in Nablus, a hilly town in the West Bank known for its cheese. On Dooz’s Instagram and Facebook accounts, local education news, job listings, and notices for lost sheep are interspersed with photos of missing journalists and appeals for blood donations.

After the war started, Dooz added new policies on what not to post, to try to lessen its chance of being banned. Images of Israeli tanks, like the ones The Markup tested, are on that list. So are pictures of Israeli checkpoints or photos of soldiers. Despite her efforts, however, Abuarab said, Dooz’s posts still get banned on both Instagram and Facebook. “We are [getting] really good information and not being able to publish it,” she said. “Half of our effort is how to avoid [being] shadowbanned because as a media outlet, our main theme is outreach, we have to reach citizens.”

Over time, Abuarab and her team have developed an instinct of what they think will be taken down, and try to get around potential platform censorship by using Arabic algospeak, or code phrases to stymie automated content moderation. “It’s just crazy. It’s like we’re makeup artists,” she said. “How can we put dots here and there?” referring to putting periods in the middle of words (such as “p.a.lestin.ia.n”) to avoid automated moderation. “How can we edit some footage [so] that [it] will not be deleted by Instagram?”

Dooz refers to the Gaza Strip as “the region,” which Abuarab said local readers understand but may not be widely understood elsewhere. Instead of “Hamas,” Dooz writes “the Greens,” referring to the green background of the Hamas flag. The news organization never writes words like “occupation,” “Palestine,” or the names of Palestinian political parties in its posts.

Abuarab, however, is worried about the long-term consequences of this technique; when you search for words like “Gaza war,” you won’t find news from outlets like hers that use algospeak. “It’s horrible because this means … that you are making the Palestinian narrative vanish. … You will not find it. So our work is not archivable. It’s not going to be saved in history, and it’s not going to be used.”

Deleting Captions Without Notice, Hiding Hashtag Posts

Instagram also appears to have censored The Markup’s test accounts in two other ways: deleting our captions without notice and inconsistently suppressing the hashtags we created.

When we included the hashtag “#markuptest” in a caption, Instagram sometimes deleted the whole caption, even if it successfully posted the photo to our account. This happened dozens of times across multiple users and accounts. Instagram did not notify any of our accounts that the text of our posts had been altered.

Ultimately, we posted more than 200 times to #markuptest, with a serious chunk of posts purposefully representing classic Instagram photos: cute dogs, delicious food, and beautiful scenery. But we also posted protest photos with additional hashtags like #istandwithisrael and #freepalestine and reported some of the accounts for violating community guidelines to test whether any of these factors would trigger some type of ban.

We checked #markuptest multiple times a day from a handful of different accounts. At times, we saw more than half of the posts, and at other times, less than a third. Sometimes we only saw a handful of photos. We couldn’t account for why this happened.
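For readers who want to run a similar check themselves, the bookkeeping behind "visible at least once" can be sketched in Python as follows. This is a hypothetical illustration, not The Markup's tooling: the check labels, viewer names, and post IDs are invented, and the observations would have to be recorded by hand or by some other means.

```python
from collections import defaultdict

# Each observation: (check label, viewer account, set of post IDs seen on the hashtag page).
# All values are invented for illustration.
observations = [
    ("day1-am", "viewer_a", {"post1", "post2", "post3"}),
    ("day1-pm", "viewer_b", {"post1"}),
    ("day2-am", "viewer_a", {"post1", "post2", "post4"}),
]
all_posts = {"post1", "post2", "post3", "post4", "post5"}

# Count how many checks each post appeared in.
times_seen = defaultdict(int)
for _label, _viewer, visible in observations:
    for post_id in visible:
        times_seen[post_id] += 1

ever_seen = set(times_seen)
never_seen = all_posts - ever_seen

# Share of all posts visible in each individual check.
for label, viewer, visible in observations:
    share = len(visible & all_posts) / len(all_posts)
    print(f"{label} ({viewer}): saw {share:.0%} of posts")

print("Never visible in any check:", sorted(never_seen))
```

Tracking each check separately, rather than only a running total, is what makes swings like "more than half" at one time and "less than a third" at another show up in the record.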

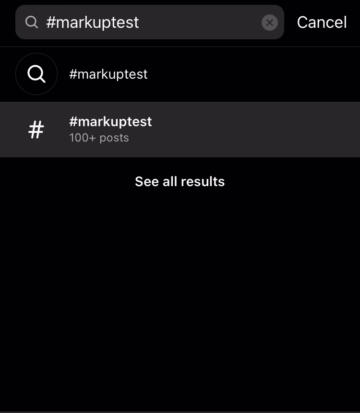

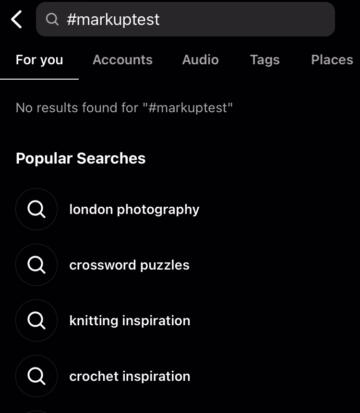

Within one week of creating our new hashtag, the #markuptest page became a mirage for some people. On both experiment accounts and genuine Instagram accounts that had not participated in our tests, the hashtag showed up in search, which stated that it had “100+ posts,” but selecting the hashtag then took users to a new screen that said, “No results found for #markuptest.” At the same time, other accounts could view the #markuptest page normally.

Searching for our new hashtag #markuptest sometimes led to strange errors. Screenshots from Feb. 8, 2024, show “#markuptest” appearing in search results with “100+ posts.” Selecting the result took the user to a new screen that said, “No results found for #markuptest.”

Credit: The Markup, Instagram

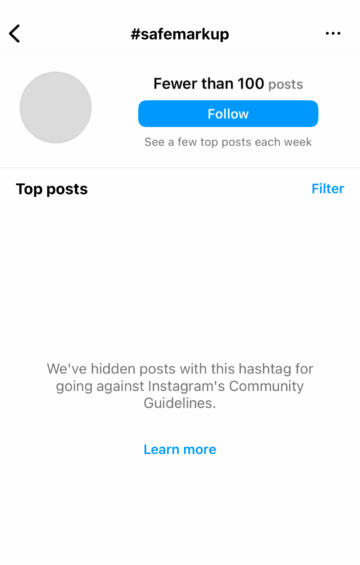

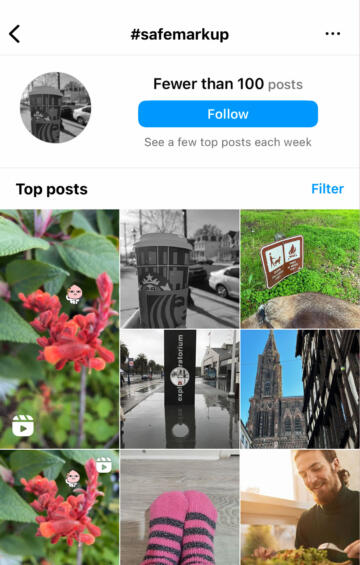

The #safemarkup hashtag, which we created to post uncontroversial photos and videos, also became a mirage for some accounts. But at certain times, the hashtag itself was officially restricted. We posted 19 times to #safemarkup, and every post appeared on the hashtag’s page at least once during our experiment.

A week after creating the hashtag, we reported 11 posts as spam. At first, these actions didn’t appear to matter, but nearly two weeks later, the hashtag was restricted for the first time. The hashtag’s page was replaced with an error message saying, “We’ve hidden posts with this hashtag for going against Instagram’s Community Guidelines.” Hours later, the hashtag’s page was once again showing posts.

The #safemarkup hashtag page on Feb. 20 and 21, 2024.

Credit: The Markup, Instagram

For over a decade, hashtags have been critical to social movements across social media, enough so that Instagram has created ways for users to “support, fundraise and spread the word about the social causes centered directly through hashtags.”

When asked whether Instagram deleted captions or suppressed the creation and use of new hashtags, Meta declined to comment and sent us to a help page on deleted posts.

Caught in a Spam Filter with No Way Out

When Shah’s Story was deleted for violating the DOI policy, he was given a link to “Request review” from Instagram if he disagreed with its decision. Shah said the process was “horrendous in the sense that it’s stagnant. Their appeals process is, honestly, just there to be there. … We can’t really follow up. There’s nobody for you to email or discuss with. … It’s no better than a dummy button in my opinion.”

The only way users can appeal Instagram’s moderation decisions directly is if it displays a link to “Request review” when it notifies users that they’ve been subject to moderation. If an initial appeal is unsuccessful, users can write to Meta’s Oversight Board, an independent group that makes moderation decisions for both Facebook and Instagram. Since forming in 2019, it has issued 77 case decisions and three policy opinions.

Unlike Shah, however, users shared examples with The Markup where they were not given the option to appeal when Instagram censored them. Many of the examples had one factor in common: Instagram had officially categorized what these users posted as “spam.”

For example, on a post to Israel’s state Instagram account, one user commented, “If I wasn’t so saddened and horrified at the atrocities you are committing, I would just sit here all day on your profile and laugh my butt off!”

Once Instagram deleted the comment as “spam,” it gave the user four examples of what falls into this category:

- Offering Instagram features that don’t exist

- Selling Instagram accounts or usernames

- Claiming others have to like, follow, or share before they can see something

- Sharing links that are harmful or don’t lead to the promised destination

The Markup asked Meta if it believed this comment and three other examples were spam, since to a layperson, none seemed to come close to this definition. Lever said that Meta could only comment if we provided the account usernames, which we did not share.

In each case, after Instagram labeled what a user wrote as “spam,” the user was not given the option to “Request review.”

Instagram’s site says that “for some types of content, you can’t request a review.” However, Lever said that spam can be appealed; she denied that Instagram purposefully categorizes some user content as spam. “If content is taken down for spam, there is a ‘Let us know’ button at the bottom if the user thinks we made a mistake,” she said.

But unlike “Request review,” it’s unclear if “Let us know” triggers a review process, or does anything at all. Reports from users and The Markup’s own testing found that the “Let us know” link didn’t always appear. When it did appear and users tried to use it, Instagram didn’t always confirm the feedback was submitted. Sometimes, nothing happened at all, as if the link were broken. Other times, users were redirected to their home feed, as if they had dismissed the notification.

Users reported a similar phenomenon to Human Rights Watch, which found that “in over 300 cases, [Facebook and Instagram] users were unable to appeal content or account removal because the appeal mechanism malfunctioned, leaving them with no effective access to a remedy.”

In our experiments, we posted comments designed to violate Instagram policies on spam and bullying to our test accounts to trigger deletions and review requests. Two of our bullying accounts got the option to request a review when their comment was deleted. But most of the other spam and bullying comments we posted were shadowbanned (that is, the comments were removed for everyone but the commenter) or placed in a “View hidden comments” section. Instagram didn’t notify any accounts of the changes, and users were not given an option to “Let us know” or “Request review.”

On Meta’s own transparency center website, where the company posts reports on community guideline violations for Facebook and Instagram, the “Spam” and “Fake Accounts” reports for Instagram have no data whatsoever. But reports for every other category, including “Dangerous Organizations” and “Bullying and Harassment,” have comprehensive data, including on the number of times users have successfully appealed their violation.

“If [Instagram] created a policy that spam doesn’t qualify for review … having a very generous view of what spam is might serve them well,” said Robyn Caplan, an assistant professor at Duke University’s Sanford School of Public Policy who researches platform governance and content moderation. She said that platforms have an incentive to avoid dealing with a high volume of appeals, especially at a time when companies have decimated their trust and safety teams.

Adam Mosseri, head of Instagram, has posted that certain activity on Instagram spikes significantly during “big moments—everything from the World Cup to social unrest.” In comments to us, Lever, Meta’s spokesperson, said that the platform has indeed experienced a rise in reported content since the beginning of the Israel–Hamas war, implying that it has created an especially challenging environment for content moderation. Instagram is clearly trying to adapt; it has changed its processes multiple times in response to users’ reactions to how it’s treating the war on the platform.

Robert Gorwa, a postdoctoral researcher at the Berlin Social Science Center and lead author of a 2020 paper about algorithmic content moderation, told The Markup that the incentives “make it very difficult” for big platforms to take bias or unfairness in automated moderation seriously. “It’s the lesser of evils. … [You’re] publicly roasted less for over-removal than under-removal.”

Correction, March 1, 2024

A screenshot of the #markuptest hashtag page was taken on Feb. 21, 2024. A previous version of this story misstated the year.


Credits


- Natasha Uzcátegui-Liggett, Statistical Journalist

- Tomas Apodaca, Journalism Engineer

Additional Reporting

- Tara García Mathewson

- Mohamed Al Elew

Illustration

- Aya El Tilbani

Art Direction

- Gabriel Hongsdusit

Graphics

- Joel Eastwood

Engagement

- Maria Puertas

Copy Editing

- Emerson Malone

Editing

- Soo Oh

- Sisi Wei

- Michael Reilly

Instagram Testers

- Mohamed Al Elew

- Tomas Apodaca

- Ali Frances

- Miles Hilton

- Gabriel Hongsdusit

- Ramsey Isler

- Nadia Lathan

- Colin Lecher

- Tara García Mathewson

- Soo Oh

- Yichong Qiu

- Ross Teixeira

- Natasha Uzcátegui-Liggett

- Sisi Wei

